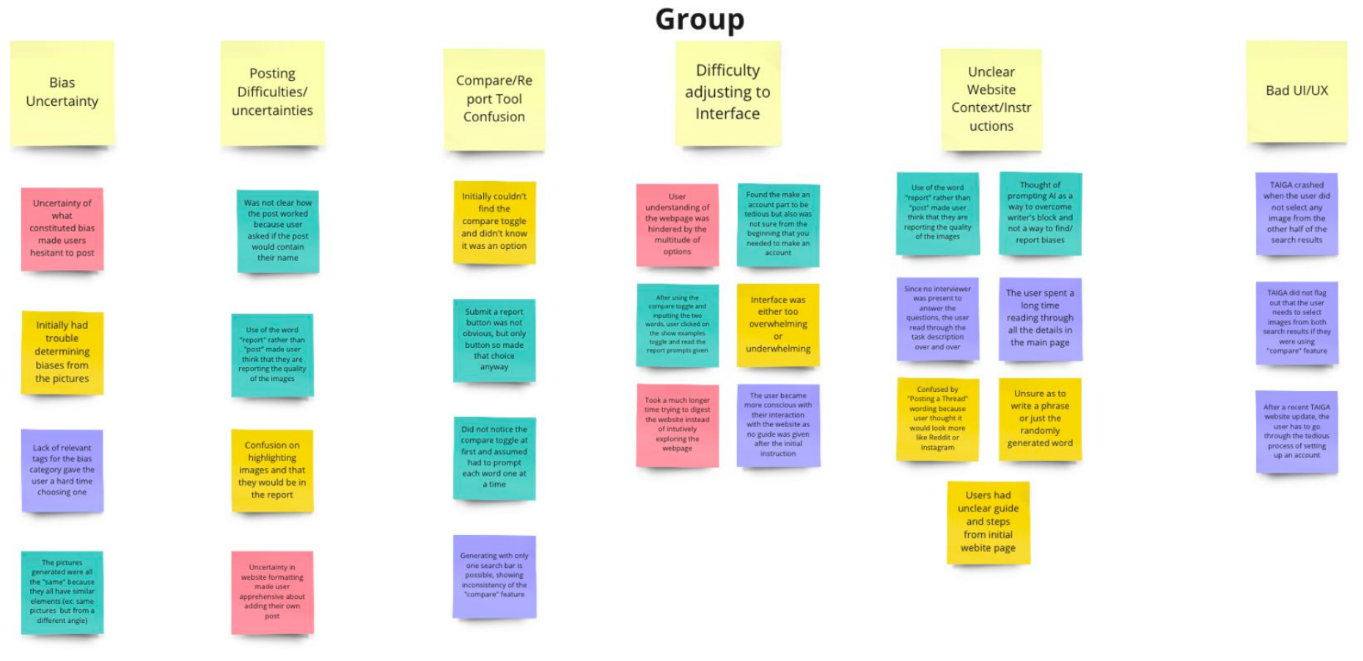

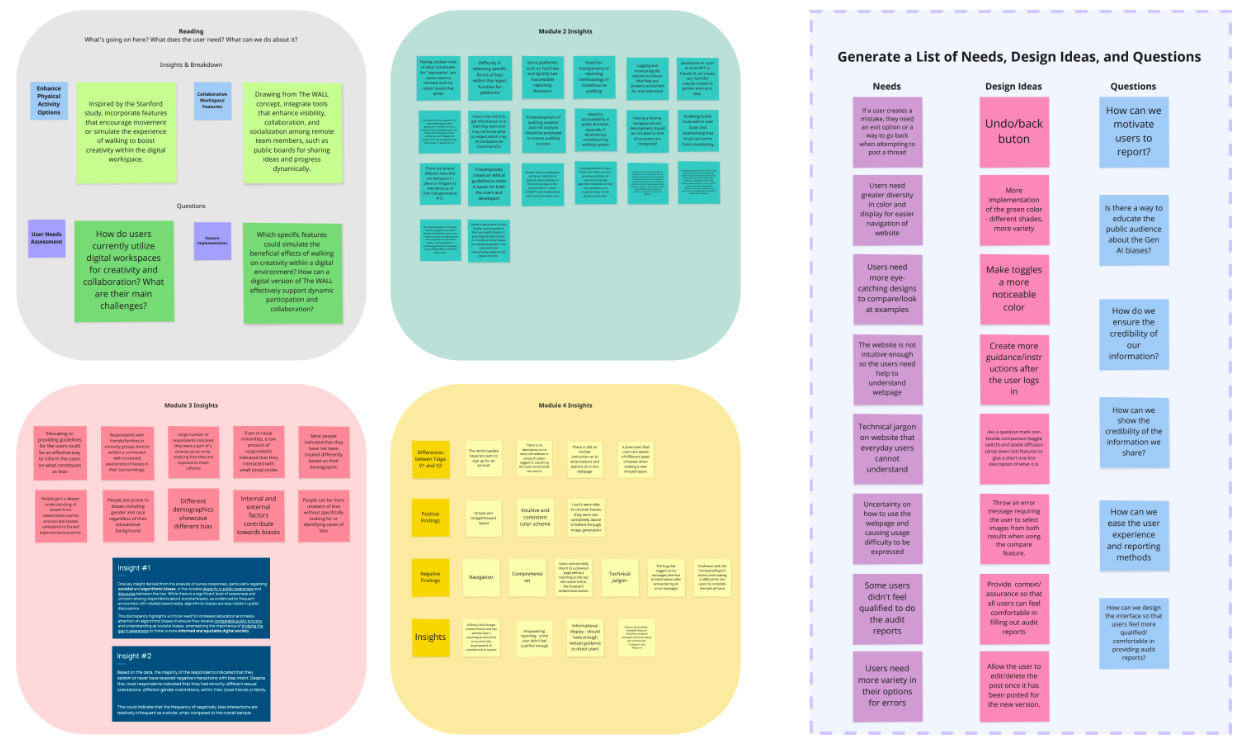

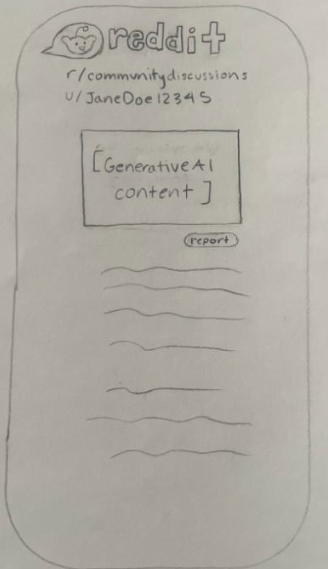

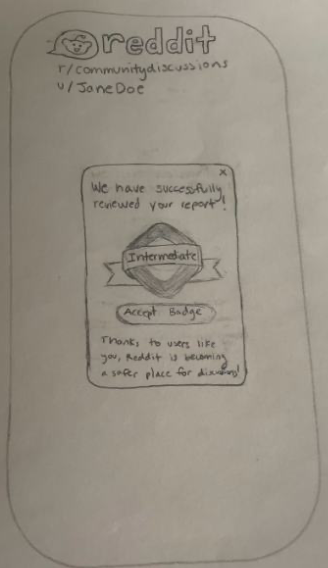

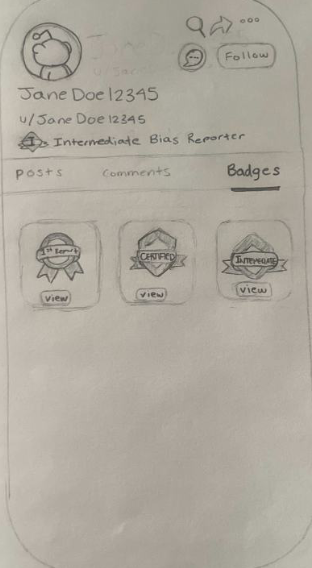

Gamifying Bias Detection

REDDIT • Project

Tools: Figma, Miro, Google Suite

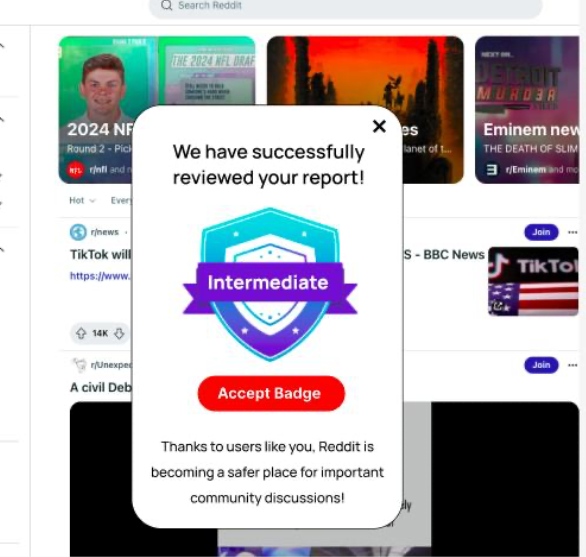

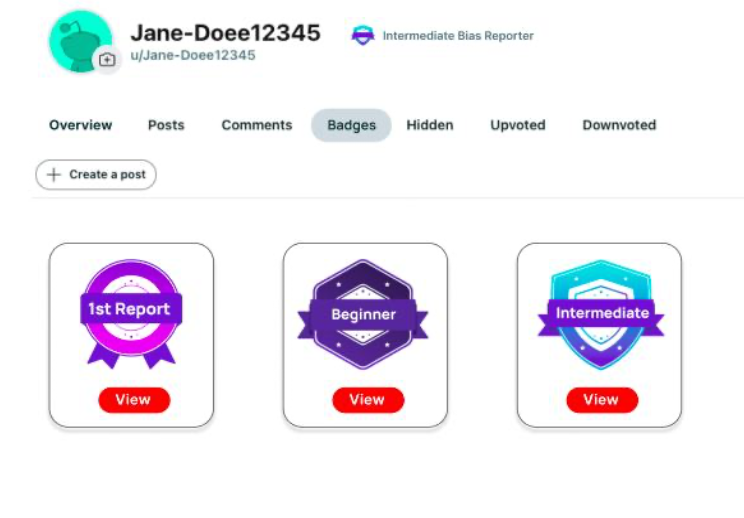

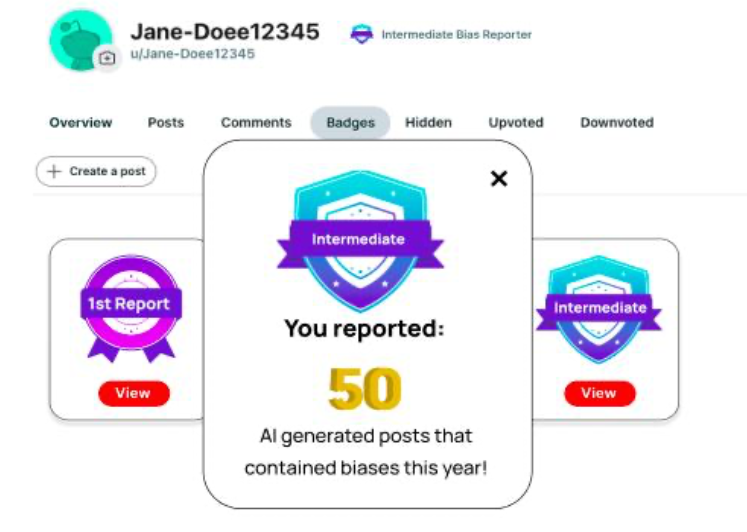

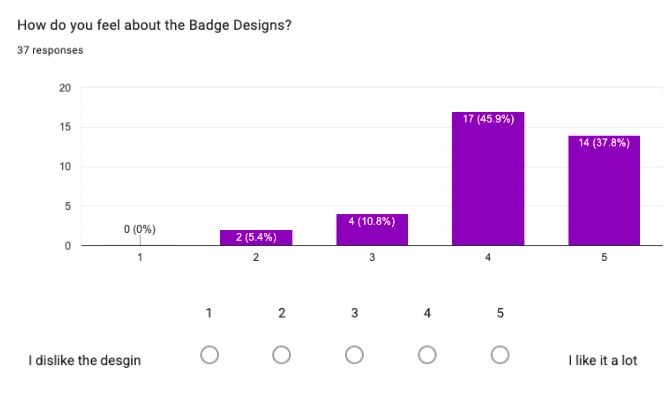

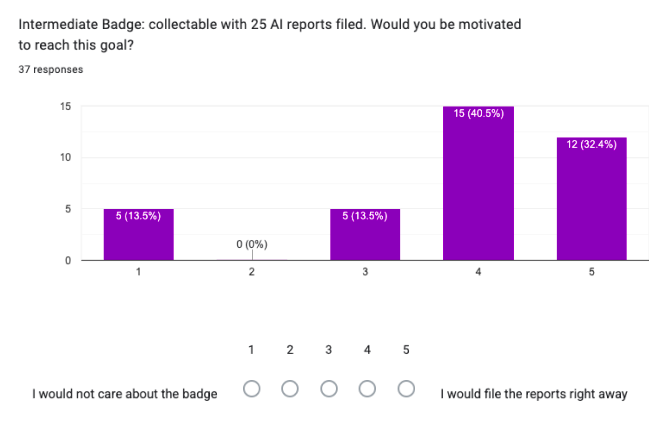

Encouraging everyday users of open discussion forums to audit and report AI biases through gamification.